Creating the Future of Autonomous Car Interfaces

HARMAN, a Samsung Company

Digital interface that allows users to influence crucial elements of autonomous driving

ROLE

Design and UX/UI Lead + overall project management

[ 6-person team ]

TIMELINE

January - August 2018

METHODS

Lo-fi & hi-fi prototyping (digital & physical), simulated autonomy (Wizard of Oz) experiment, UX strategy, generative/market/industry research & analysis

PROBLEM

Users of self-driving mode experience anxiety and discomfort during the ride because the AI's driving style does not match their own. If users want to change anything, they have to go through a disjointed process of taking back the controls. Drivers are apprehensive about interacting with AI systems because they don't know what the AI is doing or why.

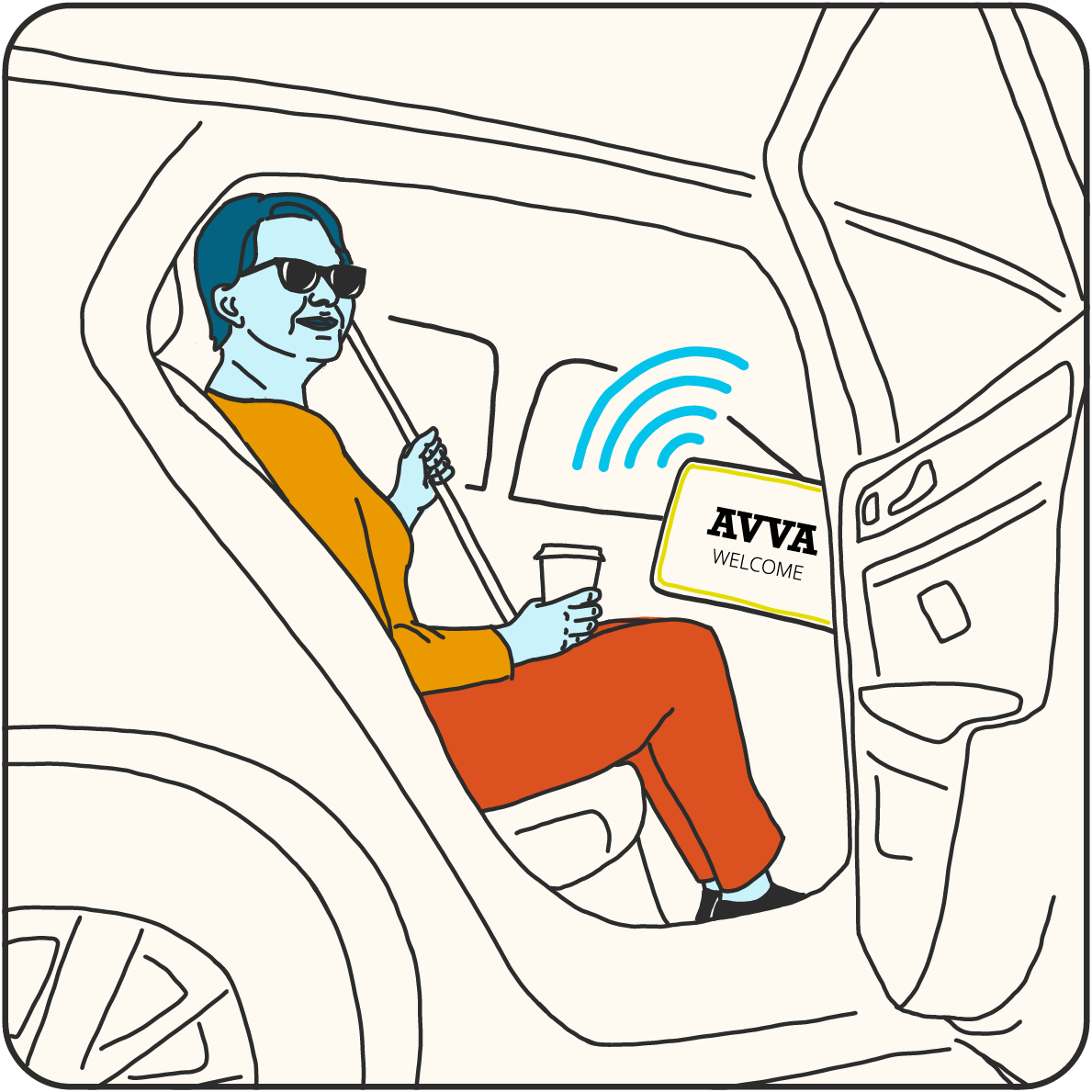

SOLUTION

A unique co-driving product that lets users control key aspects of the autonomous ride without switching out of self-driving mode, forming a human + AI collaborative system. The screen is designed to be implemented in any self-driving car, increasing users’ comfort with autonomy.

OUTCOME

Our design and research are currently being implemented by Samsung and its subsidiaries; look for them at CES 2020.

Illustrated view of the curved interface system positioned inside of a car, replacing the existing center console.

Final, fully functioning prototype, built for usability testing and for implementation by HARMAN.

PROCESS

We started with user research, creating a Wizard of Oz experiment to observe how people interact with autonomy. Insights from that research led to a series of product prototypes, from paper prototypes and 3D models to a fully functioning proof of concept. The extensive UX and UI system was then user-tested on a self-driving simulator to validate both concepts and design.

FINDINGS

Users want autonomous vehicles to drive like they do, not like a machine. The best experience in a co-driving system is created when the user has agency throughout the drive.

Giving users the right amount of control over autonomy creates a positive and comfortable experience with self-driving cars. Users want to trust and engage with autonomy, and to use autonomous modes more frequently.

Human-AI Collaborative System

Users can control a car’s actions within autonomy

To create comfort in driving, we allow users to influence the operations of the vehicle so that it can drive less like a machine, and more like they would. From our primary research insights we identified three main use cases that users wanted to alter in autonomous driving:

Speeding Up & Slowing Down

Shifting the Car’s Position Within the Lane

Situational ‘Go’ or ‘Don’t Go’ Decisions

FINAL PROOF OF CONCEPT DEMONSTRATION

Giving users control over space & distance

PROXIMITY PREFERENCE

How far the car stays from objects on the road: medians, other vehicles, and oncoming traffic, as well as its position within the lane.

CONTEXTUAL DECISIONS

The situationally aware AI asks for a decision from the human about binary options like passing a bus or taking a blind turn.

HUMAN + AI RELATIONSHIP

The communication system between the human and the AI gives the human a dedicated area for input and gives the AI its own space to respond.

Creating Unique Interaction Patterns

Indirect, Loose-Rein Control

As we uncovered mental models of what it means to control a car, we found that users think of driving as direct input. However, because user adjustments take place under the umbrella of autonomous driving mode, users don’t have the same degree of control as they would with manual driving. The unique interaction pattern and the defined S-curved shape reinforce this indirect control by feeling familiar yet distinctive at the same time.

Swipes, not taps

Manual driving is currently all about a direct, 1:1 system of engagement (tight rein). The curved screen is designed for loose-rein, indirect control, so we created an interaction pattern that de-emphasizes precision: it has no taps, buttons, or direct presses, only swipes.

CONTROL WITHOUT LOOKING

These gestures can be made anywhere, with the whole screen acting as an activation area. Accurate touching isn’t required.

Detailed swipe interaction for changing speed and lane keeping.

Detailed swipe interaction accepting a binary option presented by the AI.

Leveraging driving metaphors

We designed the curved screen to mimic the metaphors of current driving tasks. The shape creates a physical affordance that lets users feel how far they’ve adjusted the car’s movement. Swiping up to speed up mirrors pressing the gas pedal; swiping left or right mirrors turning the steering wheel.
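To make the metaphor mapping concrete, here is a minimal sketch that turns a swipe made anywhere on the screen into a single adjustment. It assumes a swipe is reported as a displacement (dx, dy) in screen points with y growing downward; the names `swipe_to_adjustment` and `Adjustment`, the dominant-axis rule, and the gain constants are hypothetical illustrations, not the project's actual implementation.

```python
from dataclasses import dataclass

# Hypothetical gains (not from the project): how much one screen point of
# swipe travel changes the requested speed (km/h) or in-lane offset (m).
SPEED_GAIN_KMH_PER_PT = 0.05
LANE_GAIN_M_PER_PT = 0.002


@dataclass
class Adjustment:
    speed_delta_kmh: float = 0.0  # positive = speed up (gas-pedal metaphor)
    lane_offset_m: float = 0.0    # positive = shift right (steering metaphor)


def swipe_to_adjustment(dx: float, dy: float) -> Adjustment:
    """Map a swipe displacement, made anywhere on the screen, to one adjustment.

    Screen y grows downward, so an upward swipe has a negative dy.
    The dominant axis wins, so a slightly diagonal swipe still reads as a
    single loose-rein intent instead of a precise two-axis command.
    """
    if abs(dy) >= abs(dx):
        # Vertical swipe: up speeds the car up, down slows it down.
        return Adjustment(speed_delta_kmh=-dy * SPEED_GAIN_KMH_PER_PT)
    # Horizontal swipe: left or right shifts the car within its lane.
    return Adjustment(lane_offset_m=dx * LANE_GAIN_M_PER_PT)
```

Because only the displacement matters, not where the finger lands, the whole screen can act as the activation area. For example, `swipe_to_adjustment(10, -200)` requests roughly +10 km/h and no lane shift.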

SIMPLE DECISION MAKING

When reacting to options, users can push a confirmation toward the AI or pull it back to dismiss it. The shape of the curve and the swipe threshold ensure that a command doesn’t get triggered by accident.
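The push-to-confirm, pull-to-dismiss rule with a threshold can be sketched as a small classifier. This assumes the swipe is measured as signed travel along the axis pointing toward the AI's secondary screen; the `classify_decision_swipe` name and the 120-point threshold are hypothetical values chosen for illustration only.

```python
from enum import Enum


class Decision(Enum):
    CONFIRM = "confirm"  # pushed toward the AI's screen
    DISMISS = "dismiss"  # pulled back toward the user
    NONE = "none"        # too short: treated as accidental contact


# Hypothetical minimum travel (screen points) before a swipe counts.
DECISION_THRESHOLD_PT = 120.0


def classify_decision_swipe(travel_toward_ai: float,
                            threshold: float = DECISION_THRESHOLD_PT) -> Decision:
    """Classify a swipe on a Go / Don't Go prompt.

    travel_toward_ai > 0 means the finger moved toward the AI's screen.
    Anything shorter than the threshold in either direction is ignored,
    so brushing against the screen cannot trigger a decision.
    """
    if travel_toward_ai >= threshold:
        return Decision.CONFIRM
    if travel_toward_ai <= -threshold:
        return Decision.DISMISS
    return Decision.NONE
```

The dead zone between the two thresholds is what keeps an accidental touch from being read as a decision; on the physical screen, the curve adds a second, tactile safeguard.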

Familiar feedback

The ‘fill-to-target’ progress indicator borrows a familiar pattern from apps: the secondary screen fills toward the requested target to show how the car is executing the user’s command.
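One way to compute such a fill is as the fraction of the requested change the car has completed so far. The sketch below assumes the indicator tracks a single numeric value (for example, speed in km/h); `fill_fraction` is a hypothetical helper, not part of the project's Framer prototype.

```python
def fill_fraction(start: float, current: float, target: float) -> float:
    """Fraction of the 'fill-to-target' bar to draw, clamped to [0, 1].

    start:   value (e.g. speed in km/h) when the user issued the command
    current: value the car reports now
    target:  value the user requested
    """
    span = target - start
    if span == 0:
        return 1.0  # nothing to change: show the bar as complete
    fraction = (current - start) / span
    # Clamp so overshoot or a brief dip never draws outside the bar.
    return max(0.0, min(1.0, fraction))
```

Because the fraction is relative to the change requested, the same function works for slowing down: going from 60 to 50 km/h, a current speed of 55 km/h fills the bar halfway.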

TRANSPARENCY THROUGH OPTIONS

The AI lives in the secondary screen, through which it communicates that it is aware of the environment. During Go or Don’t Go decisions, it shows that it understands the human may want to input a preference about the situation.

The secondary screen shows the car complying with the user’s request to speed up.

Research & Analysis:

Exploring the State of Self-Driving

Understanding a Future User

Our mission was to observe how people react to being driven around by an AI, what makes them trust or distrust the AI, and what they would miss about driving.

Wizard-of-Oz (WoZ) Ride Along Experiment

In-car observation experiment of participants inside a simulated autonomous vehicle

Tesla Owner Interviews

Closest population to autonomy: pain points with Autopilot and why they do or do not use it

Car Enthusiast Interviews

Diving into people’s relationship with their cars

Simulating autonomy with a custom WoZ experiment

Participants were driven around in a car with simulated autonomy on a predetermined route with incidents along the way. Most believed they were being driven by an AI; their reactions were turned into data points to uncover mental models and insights.

Even though full autonomy is supposed to be infallible, our research participants were still visibly uncomfortable with self-driving operations, and Tesla owners found themselves anxious when using Autopilot.

With their view of the steering wheel blocked, participants reacted naturally to the AI’s handling of incidents along the route.

A common misconception is that autonomy is binary

But it’s a spectrum: human supervision is still required

Research analysis revealed that people picture self-driving cars as a fully robotic “living room on wheels” in which they can completely disengage from the road. Interviews with stakeholders and industry experts, along with a review of available research, informed our understanding of the spectrum of autonomy and where the industry is heading.

DESIGNING FOR PARTIAL AUTONOMY

The reality is that riders cannot completely stop paying attention to the road. Self-driving cars still require drivers to take over control in specific situations. As technology improves, so will the AI, but realistically, the next 5 to 10 years of production will involve co-driving.

Users Feel Powerless Over Autonomy

Specific moments, handled well, define the whole experience

During the WoZ experiment, participants immediately compared the AI’s decisions to how they would have handled each situation. Further analysis revealed that participants’ wishes during complex scenarios could ultimately be distilled into go/don’t go actions for the car. Participants likened the experience to driving on rails with no control over their destiny.

Users want self-driving cars to handle situations like they would, not like machines.

Prototyping & User Testing:

Insights Into Opportunities

HOW MIGHT we give users control?

The best experience in a co-driving system is created when a user has agency throughout the ride

After our discovery research, we understood that predicting how an individual will want the car to react to a situation is nearly impossible. A rider’s driving preferences depend on interdependent factors such as usual driving style, weather, who’s in the car, and mood, and these factors multiply across every driving scenario. We have to give riders the agency to influence the ride so that it matches what they would do.

Deep dive into our prototyping process in the full case study.

Generating ideas through round-robin visioning, sketching interaction concepts, coming up with far-out ideas, and reining them back in during feasibility and risk assessment discussions

Digital control offers the most versatility

Adapting to scenarios requires flexible modality

Knowing that designing a new product with interdependent variables is a chicken-and-egg problem, we split each variable out into its own set of usability tests. From low-fidelity models to high-fidelity interactive prototypes mapped to a simulator, each result guided how we built the next element.

Physical affordances mapped to mental model

Starting with simple interactions, we gauged users’ understanding of the concept. Creating a physical connection through the curve emphasized the level of control users had.

Testing physical models, using a flat screen as the control variable.

Giving the Right Amount of Transparency

We tested visuals to determine how many visual affordances and signifiers needed to be placed on the user’s input area. Although iconographic reinforcement did not improve comprehension of the car’s actions, adding text made intent much clearer.

Parallel prototyping with different visual and interaction systems.

Cohesive Form and Design Language

With a fully interactive prototype created in Framer, we used an iPad to control the simulator. Swipes and visuals were refined, along with the physical positioning of the curved screen.

The final prototype is a Framer module projected onto a plexiglass screen; it controls the simulator and is linked to a secondary iPad screen.

Second-to-last rear-projection prototype, with computer vision and a link to the simulator.

MOST USERS STATED THEY WOULD ABSOLUTELY USE THIS INSTEAD OF CURRENTLY AVAILABLE OPTIONS

“I don’t like having to intervene and then re-engage the car’s self-driving...I would rather make a decision and direct the car...I would definitely rather use [this] control.”

Tesla Autopilot User

Usability Testing Participant

Productization & Roadmap

Human + AI Relationship Through Design

Bringing Understanding and Comfort to AI Interactions

TRANSPARENCY THROUGH COMMUNICATION

No more feeling powerless during autonomous driving or confused by the AI’s actions.

REDUCED ANXIETY

Increased control instills a sense of safety. Customizing a ride makes users feel far more comfortable.

MORE TRUST = MORE TIME IN AUTONOMY

As the car learns users’ preferences, drivers will be more inclined to use autonomous mode frequently.

Technical Feasibility & Integration

Intervention within the Sphere of Safety

Self-driving mode is safe, and the car will do what it thinks is appropriate for the situation. The user’s degree of control over autonomy lives within that sphere, allowing interventions only within the thresholds of safety and security.
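The idea of intervening only within the sphere of safety can be illustrated by bounding each user request by limits the autonomy stack supplies. This is a minimal sketch under stated assumptions: `SafetyEnvelope` and `apply_user_request` are hypothetical names, the envelope is assumed to be recomputed by the AI for the current situation, and clamping to the envelope's edge is only one possible policy (rejecting out-of-bounds requests outright would be another).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyEnvelope:
    """Bounds the autonomy stack currently considers safe (hypothetical)."""
    min_speed_kmh: float
    max_speed_kmh: float
    max_lane_offset_m: float  # symmetric limit on either side of lane center


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def apply_user_request(env: SafetyEnvelope,
                       requested_speed_kmh: float,
                       requested_offset_m: float) -> tuple[float, float]:
    """Honor the user's preference only as far as the envelope allows.

    The AI remains the final authority: a request outside the envelope is
    clamped to its edge rather than executed as given.
    """
    speed = clamp(requested_speed_kmh, env.min_speed_kmh, env.max_speed_kmh)
    offset = clamp(requested_offset_m,
                   -env.max_lane_offset_m, env.max_lane_offset_m)
    return speed, offset
```

For example, with an envelope of 30 to 80 km/h and a 0.4 m lane offset limit, a request for 95 km/h and a 0.6 m shift to the left is executed as 80 km/h and a 0.4 m shift to the left.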

Diagram showing the simplified decision flow from when the AI interprets its surroundings to when it executes the appropriate action.
